Abstract: Developing a universal and effective UAV low-altitude remote sensing image processing method is crucial for precise pesticide application and real-time agricultural monitoring using drones.

In the previous article, we discussed the use of 3D remote sensing technology in monitoring plant growth, pests, and diseases, as well as current research trends both domestically and internationally. Today, we will focus on how to use a low-cost visible light ultra-low-altitude remote sensing platform to extract and analyze agricultural data, providing a reference for accurate drone spraying and agricultural monitoring.

Advantages of UAV Ultra-Low Altitude Remote Sensing Technology

Pesticide pollution of farmland is currently a serious problem in China. Combined with GPS, UAV low-altitude spraying technology can plan spraying routes, but it can only spray an entire area uniformly rather than target specific locations. UAV remote sensing, by contrast, can quickly acquire high-resolution images and agronomic data for key areas, enabling more precise crop management and reducing chemical inputs. It has therefore become an essential tool in precision agriculture.

Compared to traditional remote sensing, multi-spectral UAV imagery is easier to collect, offers higher spatial resolution, and is cost-effective. When combined with UAV spraying, it provides new methods for precision agriculture, pest detection, and field analysis.

Vegetation Index and UAV Low-Altitude Remote Sensing Image Processing

Vegetation indices are widely used in remote sensing to reflect plant health and coverage. They are key to estimating parameters such as chlorophyll content, leaf area index, and biomass. Common vegetation indices include NDVI and RVI, which combine visible and near-infrared bands. However, near-infrared data usually require expensive sensors, and the imagery used to compute these indices often has limited spatial resolution, which makes them less suitable for real-time field monitoring.
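For comparison with the visible-band indices discussed next, NDVI and RVI are usually defined as (NIR - R) / (NIR + R) and NIR / R. The minimal NumPy sketch below assumes the NIR and red bands are already co-registered reflectance arrays; the function names and the small `eps` guard against division by zero are our own illustrative choices, not part of the index definitions.

```python
import numpy as np

def ndvi(nir, red, eps=1e-6):
    """Normalized Difference Vegetation Index: (NIR - R) / (NIR + R)."""
    nir = np.asarray(nir, dtype=np.float64)
    red = np.asarray(red, dtype=np.float64)
    # eps is a hypothetical guard for pixels where NIR + R == 0
    return (nir - red) / (nir + red + eps)

def rvi(nir, red, eps=1e-6):
    """Ratio Vegetation Index: NIR / R."""
    nir = np.asarray(nir, dtype=np.float64)
    red = np.asarray(red, dtype=np.float64)
    return nir / (red + eps)

# A vegetated pixel reflects far more near-infrared than red light,
# so NDVI is close to +1 and RVI is well above 1.
print(ndvi(0.5, 0.1), rvi(0.5, 0.1))
```

Both indices need a near-infrared band, which is exactly what an ordinary RGB camera lacks.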

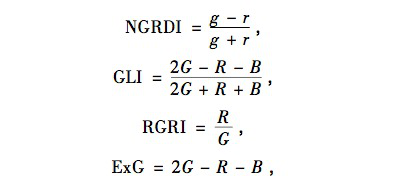

Visible light-based vegetation indices include NGRDI, GLI, RGRI, and ExG, among others. These indices are particularly useful for extracting vegetation information from low-altitude UAV images.

We conducted studies on image correction, vegetation index extraction, and crop region identification for ultra-low altitude visible light remote sensing images. Our goal was to develop a cost-effective UAV image processing method that supports precision pesticide application and farmland monitoring.

Research Process and Method

We corrected the visible light images captured by the UAV, analyzed their spectral characteristics, and calculated vegetation indices such as NGRDI, GLI, RGRI, and ExG. We then extracted vegetation based on the index distributions and histogram analysis, and tested the accuracy of the results.

Step 1: Acquisition of Visible Light Remote Sensing Images

We used a custom-built electric quadcopter equipped with an APM flight controller, an on-screen display (OSD), a barometer, and a wide-angle visible light camera. The system displayed the flight altitude in real time and had a self-stabilizing gimbal and digital image transmission.

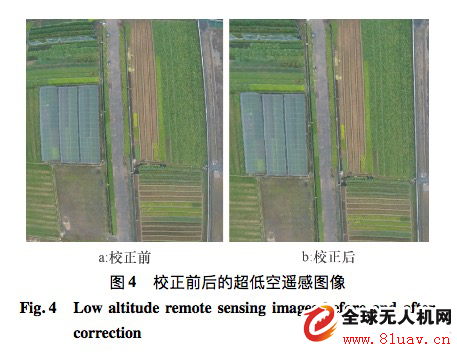

Step 2: Image Correction

Geometric correction is commonly performed using control points or feature-based methods. Because the camera parameters were unknown and the images showed barrel distortion, we used the Zhang plane calibration method to obtain the camera's intrinsic matrix and distortion coefficients, and then corrected the images.

Step 3: Visible Band Vegetation Index Calculation

The visible light band indices are calculated as follows:
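The definitions below are the forms of these four indices commonly given in the literature; we assume the study used them, although variants exist (for example, ExG is sometimes computed on raw rather than normalized band values):

$$
\begin{aligned}
\mathrm{NGRDI} &= \frac{G - R}{G + R} \\
\mathrm{GLI} &= \frac{2G - R - B}{2G + R + B} \\
\mathrm{RGRI} &= \frac{R}{G} \\
\mathrm{ExG} &= 2g - r - b, \qquad r = \frac{R}{R + G + B},\; g = \frac{G}{R + G + B},\; b = \frac{B}{R + G + B}
\end{aligned}
$$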

R, G, B represent red, green, and blue band pixel values, while r, g, b are normalized values of the same bands.
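As a sketch of how the index maps can be computed from an RGB image, the NumPy function below implements the commonly used definitions NGRDI = (G - R) / (G + R), GLI = (2G - R - B) / (2G + R + B), RGRI = R / G and ExG = 2g - r - b, with r, g, b the chromatic coordinates R / (R + G + B) and so on. We assume these match the study's formulas. The RGB channel order (OpenCV loads images as BGR by default) and the `eps` guard are our own assumptions.

```python
import numpy as np

def visible_indices(rgb, eps=1e-6):
    """Compute NGRDI, GLI, RGRI and ExG maps from an H x W x 3 image.

    Assumes channel order R, G, B; images loaded with OpenCV are B, G, R
    and must be reversed first. `eps` is a hypothetical guard against
    division by zero on black pixels, not part of the index definitions.
    """
    rgb = np.asarray(rgb, dtype=np.float64)  # avoid uint8 overflow in 2 * G
    R, G, B = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    total = R + G + B + eps
    r, g, b = R / total, G / total, B / total  # normalized band values
    return {
        "NGRDI": (G - R) / (G + R + eps),
        "GLI": (2 * G - R - B) / (2 * G + R + B + eps),
        "RGRI": R / (G + eps),
        "ExG": 2 * g - r - b,
    }

# Usage on a single green-dominant pixel (G > R > B, typical of crops).
maps = visible_indices(np.array([[[60, 120, 40]]], dtype=np.uint8))
print({name: round(float(m[0, 0]), 3) for name, m in maps.items()})
# prints {'NGRDI': 0.333, 'GLI': 0.412, 'RGRI': 0.5, 'ExG': 0.636}
```

Vegetation yields high NGRDI, GLI and ExG values but a low RGRI value, since RGRI puts red in the numerator.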

Results and Analysis

Which vegetation indices are best suited for extracting vegetation from ultra-low-altitude drone images?

We divided the experimental images into two classes, vegetation and non-vegetation, selected 10 sample regions for each class, and analyzed the statistical differences between them. The results are shown in Table 1.

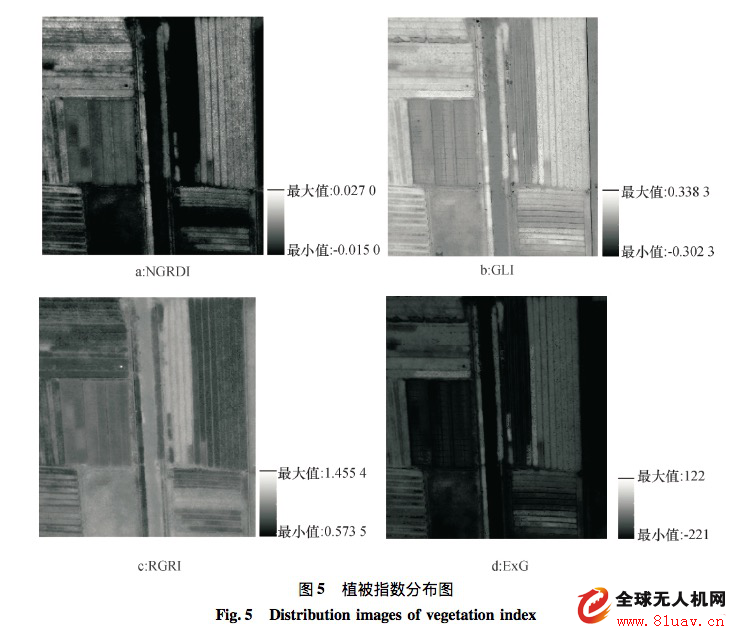

As shown in Table 1, for vegetation (crops) the green-band pixel values are higher than the red-band values, which in turn are higher than the blue-band values (G > R > B), consistent with the spectral characteristics of healthy green plants. Using the visible-band index formulas, we then calculated a distribution map for each vegetation index.
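Per-class band statistics of the kind reported in Table 1 can be computed along these lines. This minimal sketch assumes rectangular sample regions given as hypothetical `(row0, row1, col0, col1)` bounds; the article does not say how the 10 regions per class were delineated.

```python
import numpy as np

def region_means(rgb, regions):
    """Mean (R, G, B) of each sample region.

    `regions` is a list of hypothetical rectangular bounds
    (row0, row1, col0, col1), half-open like Python slices.
    """
    rgb = np.asarray(rgb, dtype=np.float64)
    return np.array([rgb[r0:r1, c0:c1].reshape(-1, 3).mean(axis=0)
                     for r0, r1, c0, c1 in regions])

def class_summary(rgb, regions):
    """Mean and standard deviation, across regions, of the per-region band means."""
    means = region_means(rgb, regions)
    return means.mean(axis=0), means.std(axis=0)
```

Calling `class_summary` once with the vegetation regions and once with the non-vegetation regions gives two rows of a Table 1-style comparison; for healthy crops the vegetation mean should satisfy G > R > B.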

(Note: In the RGRI map, darker tones indicate higher index values; in the NGRDI, GLI, and ExG maps, brighter tones indicate higher values.)
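One way to render such grayscale maps is a linear min-max stretch to 0-255, inverted for RGRI so that higher values appear darker and vegetation (which has a low R/G ratio) appears bright, as in the other maps. This rendering is an illustrative assumption; the article does not state how the maps were displayed.

```python
import numpy as np

def to_display(index_map, invert=False):
    """Linearly stretch an index map to 0-255 gray levels.

    invert=True maps higher index values to darker tones, as described
    for the RGRI map. A constant map is returned as all zeros.
    """
    x = np.asarray(index_map, dtype=np.float64)
    lo, hi = x.min(), x.max()
    scaled = (x - lo) / (hi - lo) if hi > lo else np.zeros_like(x)
    if invert:
        scaled = 1.0 - scaled
    return np.round(scaled * 255).astype(np.uint8)

print(to_display([[0.0, 0.5, 1.0]]), to_display([[0.0, 0.5, 1.0]], invert=True))
```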

Figure 5 shows that the GLI and ExG maps clearly distinguish vegetation from non-vegetation: vegetation appears bright white, while non-vegetation areas are darker. In the NGRDI and RGRI maps, by contrast, vegetation and bare soil have similar gray values, which causes confusion and lowers classification accuracy.
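The visual contrast can also be quantified. The sketch below uses the separability measure commonly called the M-statistic, M = |mean_veg - mean_non| / (std_veg + std_non), where M above about 1 is usually read as good class separation. This measure is our illustrative addition, not a statistic reported in the study.

```python
import numpy as np

def m_statistic(veg_values, nonveg_values):
    """Separability of one index between vegetation and non-vegetation samples.

    Inputs are index values sampled from each class (any shape).
    Uses population standard deviations (NumPy's default ddof=0).
    """
    v = np.asarray(veg_values, dtype=np.float64).ravel()
    n = np.asarray(nonveg_values, dtype=np.float64).ravel()
    return abs(v.mean() - n.mean()) / (v.std() + n.std())

print(m_statistic([1.0, 3.0], [7.0, 9.0]))
```

Computing M for each index on the same sample-region pixels would let the four indices be ranked by how well they separate the two classes.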

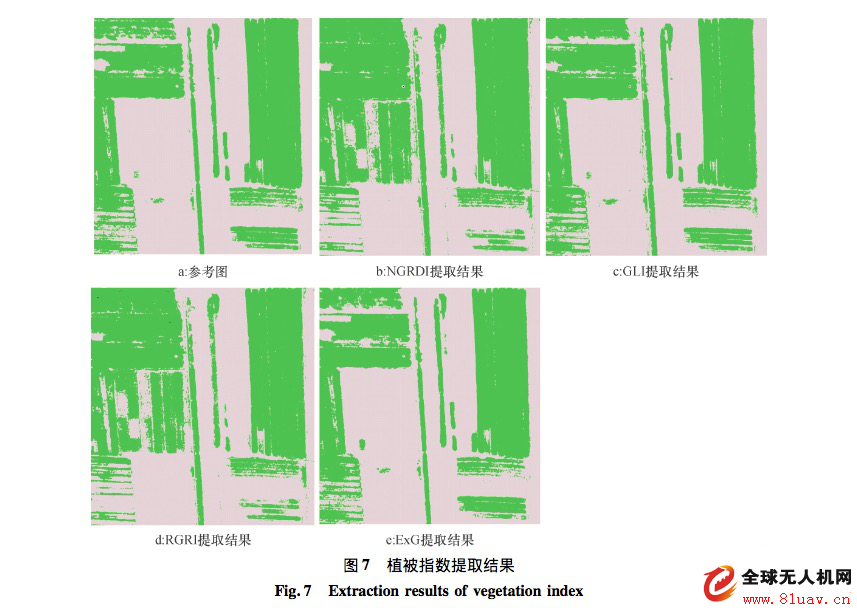

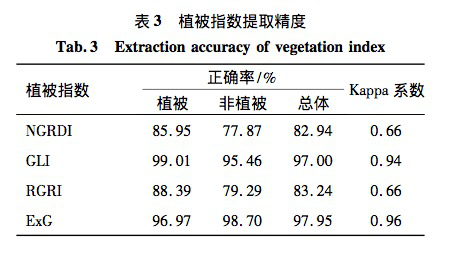

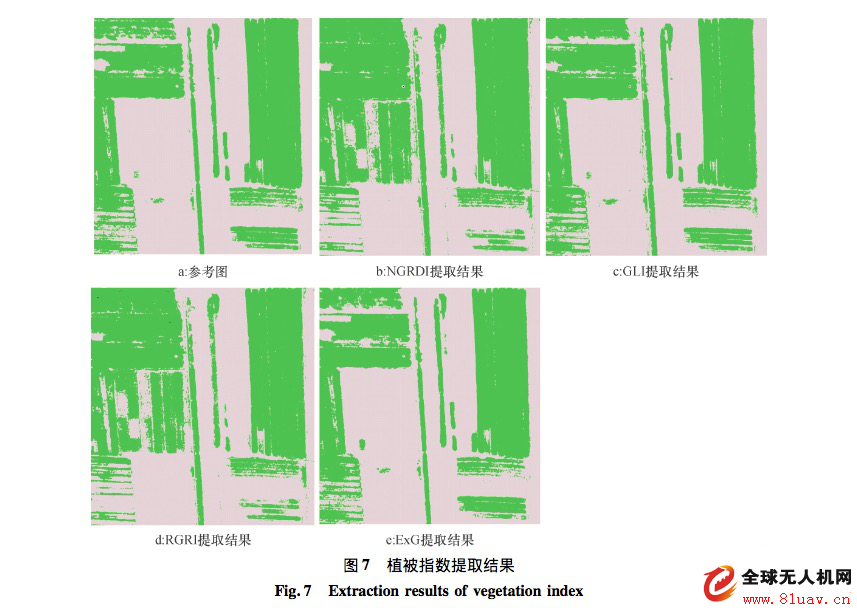

We also used thresholding to extract vegetation from each index map and evaluated the accuracy of each vegetation index (Table 3). The results are shown in Figure 7.
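The article does not say how each threshold was chosen beyond histogram analysis. As one common automatic option, here is a minimal NumPy implementation of Otsu's method, which picks the cut that maximizes the between-class variance of the index histogram. Treat it as a stand-in, not as the study's procedure.

```python
import numpy as np

def otsu_threshold(values, bins=256):
    """Otsu threshold of a 1-D distribution (e.g. all pixels of an index map).

    Pixels with value <= threshold form the lower class.
    """
    v = np.asarray(values, dtype=np.float64).ravel()
    hist, edges = np.histogram(v, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    p = hist / hist.sum()
    w0 = np.cumsum(p)                 # weight of the lower class for each cut
    mu = np.cumsum(p * centers)       # cumulative (unnormalized) mean
    mu_t = mu[-1]                     # global mean
    w1 = 1.0 - w0
    valid = (w0 > 0) & (w1 > 0)
    between = np.zeros_like(w0)
    between[valid] = (mu_t * w0[valid] - mu[valid]) ** 2 / (w0[valid] * w1[valid])
    k = int(np.argmax(between))
    return edges[k + 1]               # cut between bin k and bin k + 1

rng = np.random.default_rng(0)
sample = np.concatenate([rng.normal(0.0, 0.05, 500), rng.normal(0.5, 0.05, 500)])
print(otsu_threshold(sample))
```

For GLI, NGRDI and ExG the vegetation mask would be `index_map > t`; for RGRI, where vegetation has low values, it would be `index_map < t`.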

As shown in Table 3 and Figure 7, GLI and ExG performed best, with overall accuracies above 97% and extraction results that closely matched the reference image.
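Overall accuracy is the fraction of pixels whose extracted class agrees with the reference. A minimal sketch, assuming both the extraction result and the reference are boolean vegetation masks of equal shape (the function names are illustrative):

```python
import numpy as np

def confusion_counts(pred_mask, ref_mask):
    """Return (TP, TN, FP, FN) for boolean vegetation masks."""
    pred = np.asarray(pred_mask, dtype=bool)
    ref = np.asarray(ref_mask, dtype=bool)
    tp = int(np.sum(pred & ref))
    tn = int(np.sum(~pred & ~ref))
    fp = int(np.sum(pred & ~ref))
    fn = int(np.sum(~pred & ref))
    return tp, tn, fp, fn

def overall_accuracy(pred_mask, ref_mask):
    """Correctly classified pixels divided by all pixels."""
    tp, tn, fp, fn = confusion_counts(pred_mask, ref_mask)
    return (tp + tn) / (tp + tn + fp + fn)

print(overall_accuracy([[True, True, False, False]], [[True, False, False, False]]))
```

The same counts also give per-class figures, such as the share of reference vegetation pixels that were detected, TP / (TP + FN).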

In the NGRDI and RGRI maps, the index values of vegetation and non-vegetation overlapped more, which lowered the accuracy of extracting sparse vegetation and non-vegetation areas. This suggests that these two indices are better suited to large-scale vegetation mapping than to detailed analysis.

Compared to satellite imagery, ultra-low-altitude drone images offer higher spatial resolution, greater flexibility, and better timeliness. They have great potential in agricultural monitoring and precision farming.

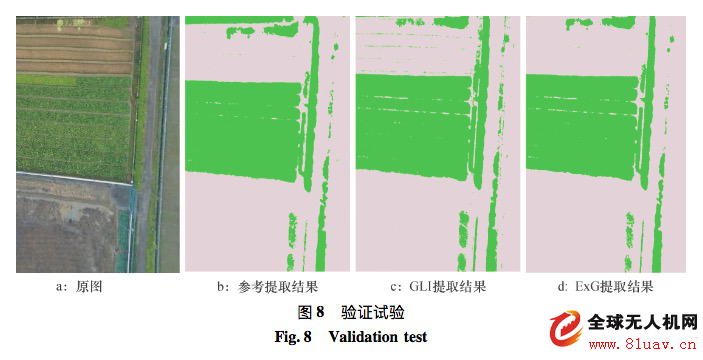

The experiments and verification results show that GLI and ExG achieve extraction accuracies above 97%, making them well suited to extracting vegetation from ultra-low-altitude visible light drone images. This low-cost image processing method delivers accurate vegetation information and has practical applications in precision agriculture, targeted spraying, pest monitoring, and agricultural analysis.

For those interested in learning more about the research process and methodology, here are two additional knowledge points:

1. Why do drone-captured images become distorted?

When a UAV captures images, factors such as terrain relief and changes in flight attitude can cause geometric distortion. Compared with spaceborne or high-altitude remote sensing, ultra-low-altitude UAV images are far less affected by Earth curvature, Earth rotation, and atmospheric refraction, so these effects can be ignored.

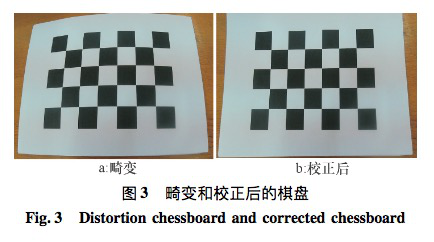

The main source of distortion in ultra-low-altitude UAV remote sensing images is geometric distortion caused by the camera lens and by terrain relief. In our experiment, the camera had not been strictly calibrated, and the distortion came mainly from the barrel distortion of the wide-angle lens.
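Lens distortion of this kind is often described by a radial polynomial model applied to normalized image coordinates, x_d = x_u (1 + k1 r^2 + k2 r^4), where r is the distance from the image center. Barrel distortion corresponds to k1 < 0, which pulls points toward the center more strongly the farther out they lie. A small NumPy sketch with illustrative coefficient values (the lens's real coefficients are unknown here):

```python
import numpy as np

def radial_distort(points, k1, k2=0.0):
    """Apply the radial distortion model to normalized coordinates (N x 2).

    k1 < 0 gives barrel distortion, k1 > 0 gives pincushion distortion.
    """
    pts = np.asarray(points, dtype=np.float64)
    r2 = np.sum(pts ** 2, axis=1, keepdims=True)  # squared radius per point
    return pts * (1.0 + k1 * r2 + k2 * r2 ** 2)

# Points on a line through the center: the outer point moves inward the most.
print(radial_distort([[0.0, 0.0], [0.5, 0.0], [1.0, 0.0]], k1=-0.2))
```

Because the displacement grows with radius, straight lines near the image edges bow outward, which is the characteristic look of wide-angle barrel distortion.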

2. What is the Zhang Plane Calibration Method?

The Zhang plane calibration method is an offline camera calibration technique used when accurate intrinsic and extrinsic camera parameters are needed: it estimates these parameters from images of a planar calibration pattern captured from multiple angles and distances.

In practice, you simply photograph a standard checkerboard from different angles and distances, compute the camera's intrinsic parameters, and then use them to correct the images.

In this experiment, we used a fixed paper calibration board and captured 24 images of it at various angles and distances. Using the Zhang method, we calculated the camera's intrinsic matrix and corrected the distorted images; the corrected image is shown in Figure 4. To keep the image size consistent, we resampled the corrected images by interpolation and adjusted their dimensions accordingly.

[Description] This article is reprinted from the column "Professional Road to Precision Agriculture," launched by Professor Lan Yubin, a leading figure in China’s agricultural aviation industry. Feifei College is a knowledge-sharing platform for UAV technology and modern agriculture established by Extreme Flying Technology, offering both online education and offline training. It has gained significant influence in modern agricultural knowledge services and continuously invites experts, scholars, and practitioners to share their insights. The platform currently has over 50,000 registered students and more than 5 million article views, and has helped 250,000 new farmers learn about drone technology and precision agriculture concepts.
