Author: Krishna Mallampati, PLX

Until now, the boundary between PCI Express (PCIe) and Ethernet has been clear: PCIe is used for chip-to-chip interconnects, and Ethernet is a system-to-system connection technology. There are good reasons why this boundary has held for so long, and the two technologies have always coexisted. Although nothing suggests that this will change completely, PCIe is increasingly showing up in areas that Ethernet used to dominate, especially within the rack. Is PCIe really competing with Ethernet, and winning?

Current architecture

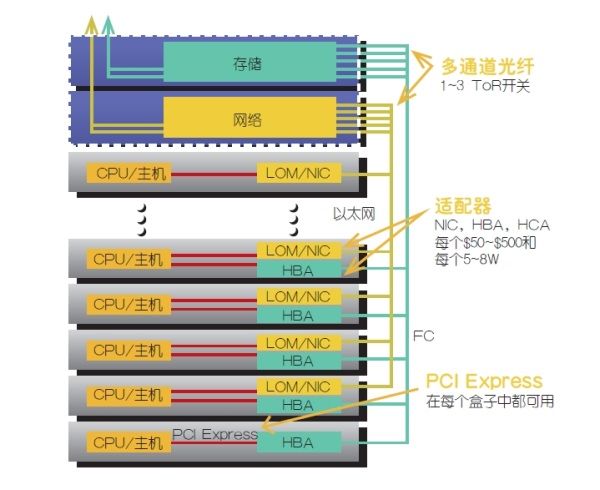

The traditional systems deployed in volume today must support a variety of interconnect technologies. As shown in Figure 1, Fibre Channel and Ethernet are two examples of these interconnects (there are others, such as InfiniBand).

Figure 1: Example of a traditional I/O system currently in use.

This architecture has the following limitations:

* Coexistence of multiple I/O interconnection technologies

* Low input/output endpoint utilization

* Increased system power consumption and costs due to the need for multiple I/O endpoints

* I/O is fixed when the architecture is built, which leaves little flexibility

* Management software must handle multiple I/O protocols, which consumes processing power

The Achilles' heel of this architecture is its use of multiple I/O interconnect technologies, which increases latency, cost, board space, and power consumption. The architecture would be worthwhile if every endpoint were fully utilized all the time. More often than not, however, endpoints sit idle, which means that users pay for capacity they only partly use. The added latency arises because the PCIe interface on the processors in these systems has to be converted to multiple protocols. (Designers can instead use the processor's native PCIe and consolidate all endpoints onto PCIe to reduce system latency.)

Clearly, sharing I/O endpoints (see Figure 2) is an effective answer to these limitations. The concept is very attractive to system designers because it reduces cost and power consumption, improves performance and utilization, and simplifies design. Because sharing endpoints offers so many advantages, many organizations have tried to make it happen; the PCI-SIG, for example, published the Multi-Root I/O Virtualization (MR-IOV) specification to achieve this goal. However, owing to a combination of technical and commercial factors, MR-IOV has not gained general acceptance even though the specification was released more than five years ago.

Figure 2: A Traditional I/O System Using PCI Express for Shared I/O

Other advantages of shared I/O include:

* As I/O speeds increase, the only additional investment required is to replace the I/O adapter card. In earlier deployments, where multiple I/O technologies sat on the same card, designers had to redesign the entire system to upgrade one specific I/O technology. With shared I/O, designers can simply swap the old card for a new one.

* Since multiple I/O endpoints no longer need to sit on the same card, designers can either build smaller cards to further reduce cost and power consumption, or keep the existing card size and use the space freed by removing I/O endpoints to differentiate their products by adding CPUs, memory, and/or other endpoints.

* Designers can reduce the number of criss-crossing cables in the system. Multiple interconnect technologies require different cables to support each protocol's bandwidth and overhead. With a simpler design and fewer I/O interconnect technologies, the system needs fewer cables to operate, which reduces design complexity and saves cost.

Implementing shared I/O within a PCIe switch is the key enabler for the architecture shown in Figure 2. As mentioned earlier, MR-IOV is not universally accepted, and a common view is that it may never be. This is where Single Root I/O Virtualization (SR-IOV) comes to the rescue. To improve performance, it implements I/O virtualization in hardware and provides hardware-based security and quality of service (QoS) features on a single physical server. SR-IOV also allows multiple guest operating systems running on the same server to share I/O devices.

In 2007, the PCI-SIG released the SR-IOV specification, which provides for dividing a single physical PCIe device, whether a network interface card, a host bus adapter, or a host channel adapter, into multiple virtual functions. Virtual machines can then use any virtual function, allowing many virtual machines and their guest operating systems to share a single physical device.

This requires I/O vendors to develop devices that support SR-IOV. SR-IOV provides the easiest way to share resources and I/O devices across different applications. The current trend is that most endpoint vendors support SR-IOV, and more are joining this camp.
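As a rough illustration of how virtual functions appear to software, the sketch below reads the SR-IOV attributes that a Linux host exposes for each PCIe device under sysfs (`sriov_totalvfs` and `sriov_numvfs`). The article does not name an operating system, so Linux is an assumption here, and the helper names `sriov_status` and `list_sriov_devices` are hypothetical.

```python
from pathlib import Path


def sriov_status(device_dir):
    """Return (total_vfs, enabled_vfs) for one PCIe device directory.

    Returns None when the device does not expose SR-IOV attributes.
    """
    dev = Path(device_dir)
    total = dev / "sriov_totalvfs"   # maximum VFs the device supports
    current = dev / "sriov_numvfs"   # VFs currently enabled
    if not (total.is_file() and current.is_file()):
        return None
    return int(total.read_text().strip()), int(current.read_text().strip())


def list_sriov_devices(sysfs_root="/sys/bus/pci/devices"):
    """Map each SR-IOV capable device address to its (total, enabled) VF counts."""
    root = Path(sysfs_root)
    if not root.is_dir():            # e.g. not running on Linux
        return {}
    result = {}
    for dev in sorted(root.iterdir()):
        status = sriov_status(dev)
        if status is not None:
            result[dev.name] = status
    return result


if __name__ == "__main__":
    for address, (total, enabled) in list_sriov_devices().items():
        print(f"{address}: {enabled} of {total} virtual functions enabled")
```

On a host without SR-IOV capable devices the script simply prints nothing. Each enabled virtual function is what a hypervisor would hand to a guest operating system as its own PCIe device.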

The many advantages of PCIe have been listed above. The icing on the cake is that PCIe is a lossless fabric at the transport layer.

The PCIe specification defines a robust flow control mechanism to prevent packet loss. At each hop, every PCIe packet is checked to ensure successful transmission. If a transmission error occurs, the packet is retransmitted; this is done in hardware without any involvement of upper-layer protocols. Therefore, in a PCIe-based storage system, data loss and corruption are almost impossible.
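The per-hop check and retransmission can be pictured with a small software model. This is only a sketch of the idea, not the PCIe data link layer itself: `zlib.crc32` stands in for the link CRC, the two-byte sequence number is a simplification, credit-based flow control is left out, and all function names are hypothetical.

```python
import zlib


def frame(seq, payload):
    """Attach a sequence number and a CRC-32 to a payload."""
    body = seq.to_bytes(2, "big") + payload
    return body + zlib.crc32(body).to_bytes(4, "big")


def check(framed):
    """Receiver side: return (seq, payload) if the CRC matches, else None."""
    body, crc = framed[:-4], int.from_bytes(framed[-4:], "big")
    if zlib.crc32(body) != crc:
        return None
    return int.from_bytes(body[:2], "big"), body[2:]


def send_over_hop(seq, payload, link, max_replays=4):
    """Send one packet across one hop, replaying it until the receiver accepts it.

    `link` models the wire: it takes the framed bytes and returns what the
    receiver sees (possibly corrupted). Returns (payload, attempts).
    """
    framed = frame(seq, payload)          # sender keeps a copy for replay
    for attempt in range(1, max_replays + 2):
        received = check(link(framed))
        if received is not None:          # receiver would acknowledge here
            return received[1], attempt
        # receiver would reject the packet; the loop replays the stored copy
    raise RuntimeError("link failed after repeated replays")


def flaky_link(bad_transfers):
    """Build a link that flips one bit in the first `bad_transfers` transfers."""
    state = {"left": bad_transfers}

    def link(framed):
        if state["left"] > 0:
            state["left"] -= 1
            corrupted = bytearray(framed)
            corrupted[2] ^= 0x01          # single-bit error on the wire
            return bytes(corrupted)
        return framed

    return link


if __name__ == "__main__":
    data, attempts = send_over_hop(7, b"block-42", flaky_link(2))
    print(data, attempts)                 # payload arrives intact on attempt 3
```

The caller of `send_over_hop` only ever sees the intact payload, which mirrors the point above: recovery happens at the hop, and upper layers are not involved.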

PCIe provides a simplified solution by allowing all I/O adapters (10 Gigabit Ethernet, FC, or others) to move out of the server. With the virtualization support provided by the PCIe switch fabric, each adapter can be shared by multiple servers while presenting a logical adapter to each server. Each server (or each virtual machine on a server) can continue to access its own set of hardware resources directly on the shared adapter. This approach to virtualization scales better, because I/O and servers can be sized independently of each other. I/O virtualization avoids over-provisioning of server or I/O resources, which reduces cost and power consumption.

Table 1 provides a high-level comparison of the cost of PCIe and 10G Ethernet; Table 2 provides a high-level comparison of the power consumption of the two.

Table 1: Comparison of cost savings with PCIe and Ethernet.

Table 2: Comparison of power consumption reductions with PCIe and Ethernet.

Price estimates are based on a broad industry survey; for ToR (top-of-rack) switches and adapters, prices are assumed to vary with volume, availability, and the depth of the supplier relationship. The two tables provide a framework for understanding the cost and power benefits of using PCIe for I/O sharing (especially by removing adapters).

Of course, this raises the question of why cost and power consumption are compared per gigabyte per second rather than per port. The main reason is that data center suppliers increasingly charge by the bandwidth used rather than by the number of connections. PCIe provides about three times the bandwidth of 10G Ethernet, which allows the supplier to make more profit. A comparison made the other way (building the same system with the same number of ports) reaches the same conclusion: PCIe comes out ahead of Ethernet by more than 50%.
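The "about three times" figure can be sanity-checked with a short calculation. The sketch below assumes a third-generation x4 link (the article does not state a link width, so x4 is an assumption) and uses the Gen3 signalling rate of 8 GT/s per lane with 128b/130b encoding; packet header overhead is ignored. The `per_bandwidth` helper only shows the normalization itself and is called with no real price or power data.

```python
def pcie_gen3_gbps(lanes):
    """Line bandwidth of a PCIe Gen3 link in Gbps, one direction."""
    raw_gt_per_lane = 8.0            # Gen3 signalling rate, GT/s per lane
    encoding = 128 / 130             # Gen3 128b/130b line encoding
    return raw_gt_per_lane * encoding * lanes


def per_bandwidth(total, bandwidth_gbps):
    """Normalize a cost or power figure by link bandwidth."""
    return total / bandwidth_gbps


if __name__ == "__main__":
    pcie = pcie_gen3_gbps(4)         # x4 width is an assumption
    ethernet = 10.0                  # 10G Ethernet line rate in Gbps
    print(f"PCIe Gen3 x4: {pcie:.1f} Gbps, {pcie / ethernet:.2f}x 10GbE")
    # prints: PCIe Gen3 x4: 31.5 Gbps, 3.15x 10GbE
```

Under these assumptions the ratio lands close to three, so a link that costs or consumes the same as a 10G Ethernet port would show roughly one third of the per-bandwidth figure.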

Summary

This article has focused on comparing PCIe and Ethernet in terms of cost and power consumption. Other technical characteristics of the two should of course be compared as well. Designers are nonetheless already benefiting, as major processor vendors increasingly build PCIe into their processors. With these next-generation CPUs, designers can connect a PCIe switch directly to the CPU, reducing latency and component cost.

PCIe technology has become ubiquitous. The third generation of this powerful interconnect technology (8 GT/s per lane) not only supports shared I/O and clustering, but also gives system designers an unparalleled tool for making their designs optimal and efficient.

To meet the needs of the shared I/O and clustering market segments, vendors such as PLX Technology are bringing high-performance, flexible, low-power, small-footprint devices to market. These switches are tailored to accommodate the full range of applications mentioned above. Going forward, fourth-generation PCIe, at speeds of up to 16 GT/s per lane, will help PCIe move faster into new market segments and expand them, while making the technology easier and more economical to design with and use.

Many global vendors have adopted this ubiquitous interconnect technology to support I/O endpoint sharing. As a result, system cost and power requirements fall, and so do maintenance and upgrade requirements. PCIe-based shared I/O endpoints are expected to reinvigorate the multi-billion dollar data center market.

However, Ethernet and PCIe will continue to coexist: Ethernet will be used for system-to-system connections, while PCIe continues its advance within the rack.
